25th anniversary of Instituto ITACA

July 12, 2024

This year, we are celebrating the 25th anniversary of Instituto ITACA.

Congratulations!!

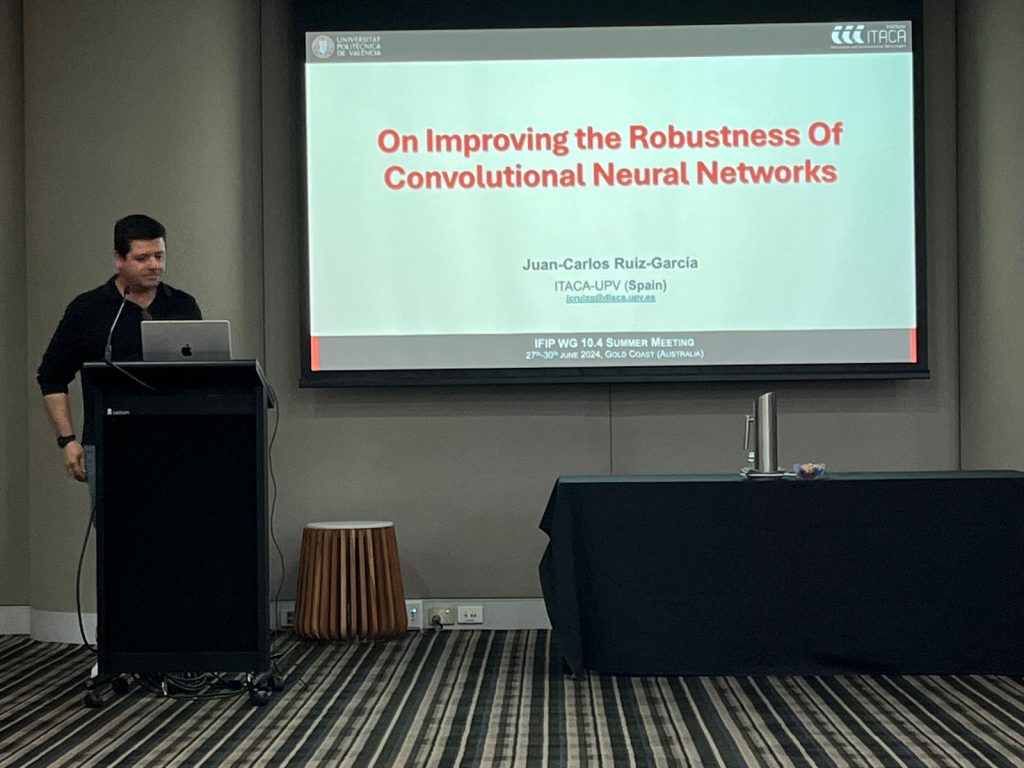

Presentation at IFIP WG 10.4 meeting

July 2, 2024

Juan C. Ruiz presented the research carried out by the Fault-Tolerant Systems research group of UPV at the 86th IFIP WG 10.4 Meeting in Gold Coast, Australia, in a talk entitled “On improving the robustness of convolutional neural networks”.

CEDI 2024

June 28, 2024

Juan C. Ruiz, Luis J. Saiz and Joaquín Gracia attended CEDI 2024, held in A Coruña, Spain. During the conference, they met other researchers, exchanged ideas and explored possible future collaborations.

IFIP WG 10.4 meeting

June 28, 2024

Juan C. Ruiz is attending the IFIP WG 10.4 meeting in Gold Coast, Australia. IFIP WG 10.4 (Dependable Computing and Fault Tolerance) aims to identify and integrate approaches, methods and techniques for specifying, designing, building, assessing, validating, operating and maintaining computer systems that should exhibit some or all of the attributes of dependability, such as reliability, availability, safety and security.

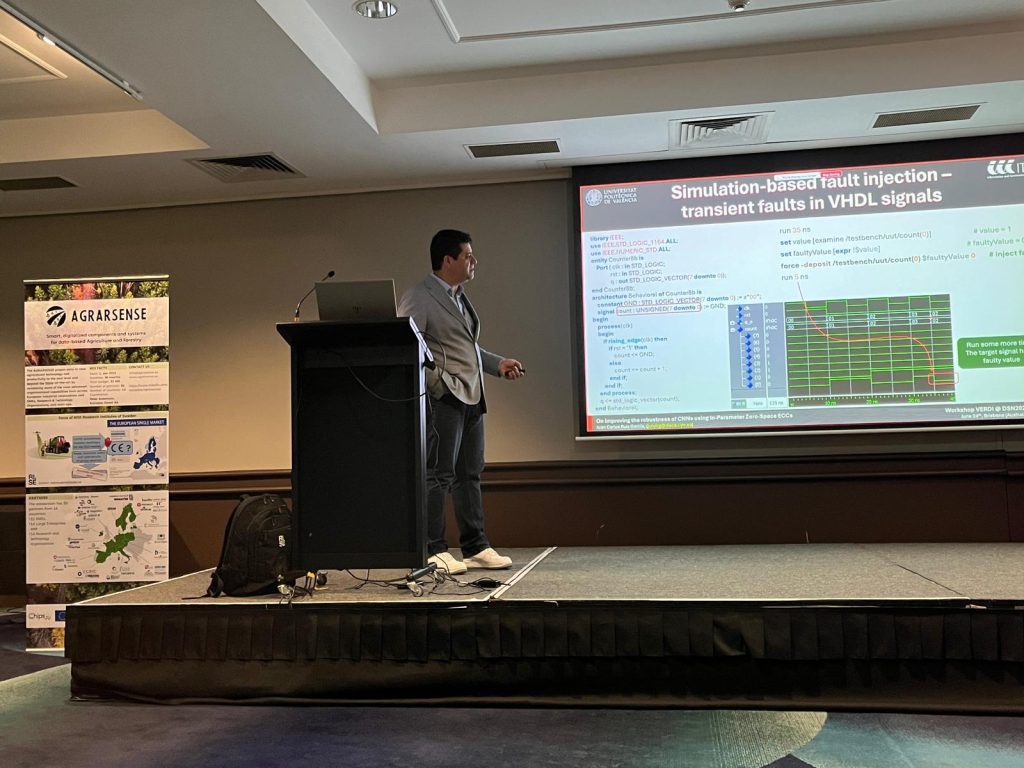

Invited speaker at DSN 2024

June 26, 2024

On June 24, the VERDI workshop (co-located with DSN 2024) was held in Brisbane, Australia. Juan C. Ruiz was the invited speaker at the workshop, with a talk entitled “On improving the robustness of convolutional neural networks using in-parameter zero-space error correction codes”.

The VERDI 2024 program is available on the workshop website.

This talk has been sponsored by the Agencia Estatal de Investigación (Ministerio de Ciencia e Innovación) through the DEFADAS project.

Presentation at Jornadas SARTECO 2024 (III)

June 26, 2024

J.C. Ruiz-García presented the paper “Tolerancia a fallos múltiples en redes convolucionales en coma flotante de 16 bits utilizando códigos correctores de errores” (Tolerating multiple faults in 16-bit floating-point convolutional networks using error-correcting codes), authored by J.C. Ruiz-García, D. de Andrés Martínez, Luis J. Saiz-Adalid and J. Gracia-Morán, at Jornadas SARTECO 2024 in A Coruña.

Presentation at Jornadas SARTECO 2024 (II)

June 26, 2024

J. Gracia-Morán presented the paper “Protección mediante Códigos de Corrección de Errores de los pesos de una Red Neuronal implementada en Arduino” (Error correction code protection of the weights of a neural network implemented on Arduino), authored by J. Gracia-Morán and Luis J. Saiz-Adalid, at Jornadas SARTECO 2024 in A Coruña.

Presentation at Jornadas SARTECO 2024 (I)

June 18, 2024

Luis J. Saiz-Adalid presented the paper “Estudio de la confiabilidad de una red neuronal convolucional cuantizada” (Dependability study of a quantized convolutional neural network), authored by J. Gracia-Morán, Luis J. Saiz-Adalid, J.C. Ruiz-García and D. de Andrés Martínez, at Jornadas SARTECO 2024 in A Coruña.

Paper available at IEEE early access

May 23, 2024

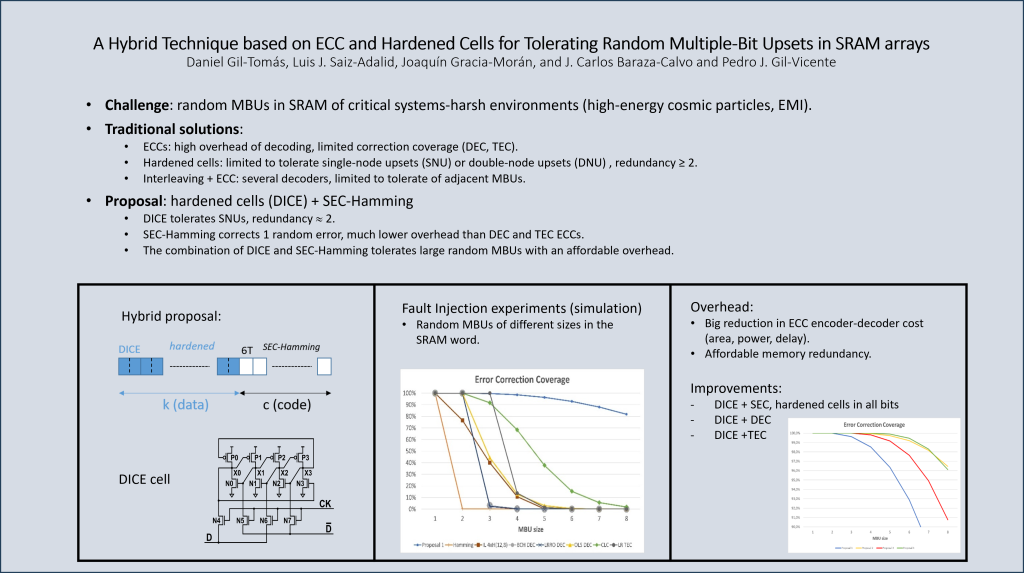

The paper entitled “A Hybrid Technique based on ECC and Hardened Cells for Tolerating Random Multiple-Bit Upsets in SRAM arrays”, written by Daniel Gil-Tomás, Luis J. Saiz-Adalid, Joaquín Gracia-Morán, J. Carlos Baraza-Calvo and Pedro J. Gil-Vicente, is now available as an early-access article in IEEE Access.

Abstract:

Multiple-bit upsets (MBUs) are an increasing challenge in SRAM memories, due to the large chip area occupied by SRAM and the supply voltage scaling applied to reduce static power consumption. Powerful error correction codes (ECCs) can cope with random MBUs, but at the expense of complex encoding/decoding circuits and high memory redundancy. Alternatively, radiation-hardened cells can mask single or even double node upsets in the same cell, but at the cost of a larger memory-array overhead. The idea of this work is to combine both techniques to take advantage of their respective strengths. To reduce redundancy and encoder/decoder overheads, a single error correction (SEC) Hamming code has been chosen. As for the hardened cells, the well-known and robust DICE cells, able to tolerate one node upset, have been used. To assess the proposed technique, we have measured the correction capability after a fault injection campaign, as well as the overhead (redundancy, area, power, and delay) of the memory and the encoding/decoding circuits. Results show high MBU correction coverage with an affordable overhead. For instance, for very harmful 8-bit random MBUs injected in the same memory word, more than 80% of the cases are corrected. The area overhead of our proposal, measured with respect to double and triple error correction codes, is less than ×1.45. Achieving the same correction coverage with ECCs alone would require much higher redundancy and overhead.
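To illustrate what an SEC Hamming ECC does, here is a minimal Python sketch of a generic single-error-correcting Hamming code over an arbitrary word width. It is an illustrative assumption, not the authors' encoder/decoder circuits, and it does not model DICE cells; the helper names `hamming_encode` and `hamming_decode` are hypothetical. The final case shows why SEC alone struggles with MBUs: a two-bit upset produces a nonzero syndrome that points at a third, correct bit, which the decoder then flips.

```python
# Illustrative sketch with hypothetical helpers; not the paper's implementation.
# Only the SEC Hamming side is modeled; DICE hardened cells are not.
from typing import List, Tuple


def hamming_encode(data_bits: List[int]) -> List[int]:
    """Encode 0/1 data bits with a single-error-correcting (SEC) Hamming code.

    Parity bits occupy the 1-based positions that are powers of two
    (1, 2, 4, 8, ...); data bits fill the remaining positions in order.
    """
    k = len(data_bits)
    # Smallest number of parity bits r with 2**r >= k + r + 1.
    r = 0
    while 2 ** r < k + r + 1:
        r += 1
    n = k + r
    code = [0] * (n + 1)  # index 0 is unused so that positions are 1-based
    data_iter = iter(data_bits)
    for pos in range(1, n + 1):
        if pos & (pos - 1):  # not a power of two, so it is a data position
            code[pos] = next(data_iter)
    for i in range(r):
        p = 1 << i
        # Parity bit p makes the count of ones among positions with bit p set even.
        code[p] = sum(code[pos] for pos in range(1, n + 1) if pos & p and pos != p) % 2
    return code[1:]


def hamming_decode(codeword: List[int]) -> Tuple[List[int], int]:
    """Correct at most one flipped bit; return (data_bits, syndrome)."""
    n = len(codeword)
    code = [0] + list(codeword)
    syndrome = 0
    for pos in range(1, n + 1):
        if code[pos]:
            syndrome ^= pos  # XOR of the positions of all set bits
    # A nonzero syndrome is interpreted as the position of a single flipped bit.
    if 0 < syndrome <= n:
        code[syndrome] ^= 1
    data = [code[pos] for pos in range(1, n + 1) if pos & (pos - 1)]
    return data, syndrome


if __name__ == "__main__":
    data = [1, 0, 1, 1, 0, 0, 1, 0]  # one 8-bit word
    word = hamming_encode(data)      # 12 bits: 8 data + 4 parity

    single = list(word)
    single[5] ^= 1                   # single-bit upset at position 6
    print(hamming_decode(single)[0] == data)  # True: corrected

    double = list(word)
    double[0] ^= 1                   # two-bit upset at positions 1 and 2
    double[1] ^= 1
    print(hamming_decode(double)[0] == data)  # False: miscorrected
```

The sketch covers only the ECC half of the hybrid approach; the masking of node upsets provided by hardened cells is outside its scope.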

Paper accepted at IEEE Access

May 13, 2024

The paper entitled “A Hybrid Technique based on ECC and Hardened Cells for Tolerating Random Multiple-Bit Upsets in SRAM arrays”, written by Daniel Gil-Tomás, Luis J. Saiz-Adalid, Joaquín Gracia-Morán, J. Carlos Baraza-Calvo and Pedro J. Gil-Vicente, has been accepted for publication in IEEE Access.