Attendance at SAFECOMP 2025

September 11, 2025

Juan Carlos Ruiz is attending SAFECOMP 2025, where he will present the work entitled “Can C-Based ECC Models Leverage High-Level Synthesis? Evaluating Description Variants for Efficient Circuit Implementations”, authored by Joaquín Gracia-Morán, Juan-Carlos Ruiz, David de Andrés, and Luis-J. Saiz-Adalid.

He will also introduce the next edition of the conference, SAFECOMP 2026, which will be held in Valencia.

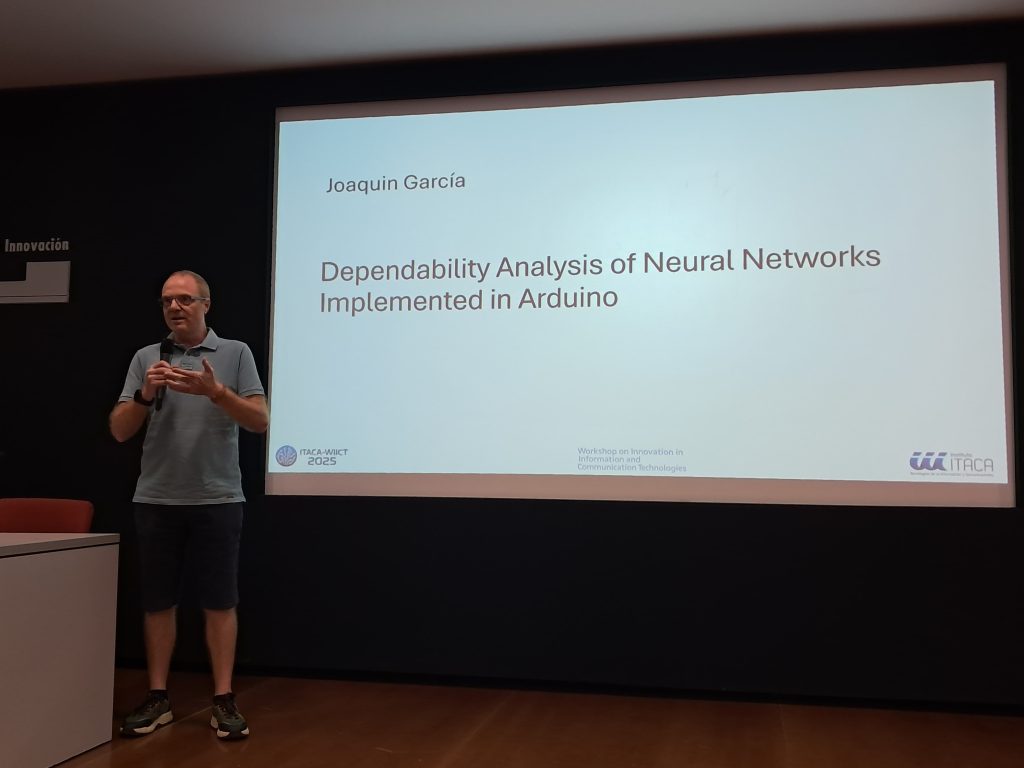

Presentation at WIICT 2025

July 11, 2025

Joaquín Gracia-Morán presented the work entitled “Dependability analysis of neural networks implemented in Arduino” at WIICT 2025.

Abstract

The use of neural networks has expanded to environments as diverse as medical systems, industrial devices and space systems. In these cases, it is essential to balance performance, power consumption, and silicon area. Furthermore, in critical environments, it is necessary to ensure high fault tolerance.

Traditionally, the parameters of neural networks have been encoded as 32-bit floating-point numbers, which entails high memory consumption and greater vulnerability to faults due to the aggressive scaling of CMOS technology. An effective strategy for optimizing these systems is to reduce parameter precision by using fewer bits, thereby reducing both the amount of memory required and the processing time.

However, several questions arise when implementing these types of networks in embedded systems: Do they maintain their reliability in critical environments, or do they require fault tolerance mechanisms? Are area and latency really reduced?

This work addresses these questions by reducing the precision of a neural network and implementing it on an Arduino-based system. In addition, Error Correction Codes have been incorporated, and the reliability of the resulting implementations has been evaluated through fault injection, comparing versions of the same neural network with parameters encoded in 8, 16 and 32 bits.
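The abstract relies on injecting faults into network parameters stored with different bit widths. As a rough illustration only, the Python sketch below shows one common way to emulate a single bit-flip in a stored parameter. It assumes an IEEE 754 binary32 encoding for the 32-bit case and a two's-complement int8 encoding for the 8-bit case. The function names are hypothetical, and this is not the authors' injection tool.

```python
import struct

def flip_bit_float32(value: float, bit: int) -> float:
    """Invert bit `bit` (0 = LSB, 31 = sign) of `value` stored as IEEE 754 binary32."""
    (word,) = struct.unpack("<I", struct.pack("<f", value))
    word ^= 1 << bit
    (faulty,) = struct.unpack("<f", struct.pack("<I", word))
    return faulty

def flip_bit_int8(value: int, bit: int) -> int:
    """Invert bit `bit` (0 = LSB, 7 = sign) of `value` stored as a two's-complement int8."""
    word = (value & 0xFF) ^ (1 << bit)
    # Reinterpret the resulting 8-bit pattern as a signed integer.
    return word - 256 if word & 0x80 else word

if __name__ == "__main__":
    weight = 0.5
    for bit in (0, 22, 30, 31):
        print(f"float32 bit {bit:2d}: {weight} -> {flip_bit_float32(weight, bit)}")
    for bit in (0, 6, 7):
        print(f"int8    bit {bit}: 64 -> {flip_bit_int8(64, bit)}")
```

Under these assumptions, flipping bit 30 of 0.5 (the most significant exponent bit) yields 2^127. By contrast, any single flip in an int8 value stays within [-128, 127]. This gives some intuition for why the parameter encoding matters. The actual effect on a quantized network also depends on its scale factors.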

Attendance at Jornadas SARTECO

July 1, 2025

Researchers from the Fault-Tolerant Systems Group participated in the latest Jornadas SARTECO, held in Sevilla.

During the event, the group’s researchers gave several talks on advances from the national project DEFADAS (Dependable-enough FPGA-Accelerated DNNs for Automotive Systems) and from a project of the Universitat Politècnica de València (Convocatoria A+D, Proyectos de Innovación y Mejora Educativa, PIME/24-25/435). The presentations covered some of the group’s main lines of research:

- Enhancing the robustness and reliability of different types of neural networks.

- Design and evaluation of fault-tolerance strategies for neural networks.

Paper accepted at SAFECOMP 2025

June 2, 2025

The paper entitled “Can C-Based ECC Models Leverage High-Level Synthesis? Evaluating Description Variants for Efficient Circuit Implementations”, authored by Joaquín Gracia-Morán, Juan-Carlos Ruiz, David de Andrés and Luis-J. Saiz-Adalid has been accepted at SAFECOMP 2025.

Abstract:

Error-Correcting Codes (ECCs) are essential for achieving fault tolerance in hardware accelerators deployed in safety-critical applications, such as those used to accelerate neural network inference and cryptographic processing. High-Level Synthesis (HLS) enables the automatic translation of ECC models described in C/C++ into synthesisable hardware, but the structure and style of such descriptions significantly affect circuit-level efficiency. This work investigates five ECC *coding strategies*, each representing a distinct combination of syndrome computation and correction mechanisms. These strategies are implemented using either standard C++ integers or the arbitrary-precision integers provided by the HLS *AP_INT* library. The *ECC protection levels* considered are Single, Double, and Triple-Adjacent Error Correction, for *dataword lengths* of 8, 16, and 32 bits. This results in 90 ECC configurations, each synthesised for both ASIC and FPGA targets, yielding 180 hardware implementations in total. Hardware metrics, including area, power consumption, latency, and resource usage, are evaluated and analysed using analysis of variance (ANOVA) to determine the influence of each design factor. Results indicate that logic-oriented descriptions generally yield more efficient circuits for ASICs, whereas memory-centric implementations perform better on FPGAs equipped with dedicated BRAM. The *AP_INT* library has minimal statistical impact but provides finer control over bit-widths. Based on these findings, a set of high-level modelling guidelines is proposed for implementing ECCs efficiently via HLS.

Teaching at “Máster en Ingeniería de Sistemas Empotrados”

May 15, 2025

From 5 to 9 May, Joaquín Gracia taught the course “Fiabilidad en Sistemas Empotrados” (Reliability in Embedded Systems), part of the “Máster en Ingeniería de Sistemas Empotrados” at UPV/EHU.

Papers accepted at Jornadas SARTECO 2025

May 5, 2025

Several papers authored by GSTF members have been accepted at Jornadas SARTECO 2025, which will be held in Sevilla (Spain) next June.

Title: Análisis de la confiabilidad de una red neuronal implementada en Arduino con formato BF16 (Dependability analysis of a neural network implemented on Arduino using the BF16 format)

Authors: Joaquín Gracia-Morán, David de Andrés, Luis-J. Saiz-Adalid, Juan Carlos Ruiz, J.-Carlos Baraza-Calvo, Daniel Gil-Tomás, Pedro J. Gil-Vicente

Abstract: The use of neural networks has expanded to environments as diverse as industrial devices, medical systems and space systems. In these cases, it is essential to balance performance, power consumption and silicon area. Furthermore, in critical environments, high fault tolerance must be guaranteed.

Traditionally, neural networks have used 32-bit floating-point parameters, which entails high memory consumption and greater vulnerability to faults due to the miniaturization of CMOS technology. An effective strategy for optimizing these systems is to reduce parameter precision by using fewer bits, thereby reducing the amount of memory required and the processing time.

However, questions arise when implementing this type of network in embedded systems: do they remain dependable in critical environments, or do they require fault-tolerance mechanisms? Are area and latency really reduced?

This work addresses these questions by reducing the precision of a neural network and implementing it on an Arduino-based system. In addition, Error Correction Codes have been incorporated, and the network's dependability has been evaluated through fault injection, comparing it with the same network with its parameters encoded in 32 bits.
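BF16 (bfloat16) keeps the sign bit and the 8-bit exponent of a 32-bit float but only 7 fraction bits. A float32 parameter can therefore be converted by keeping its upper 16 bits. The abstract does not state how the parameters were converted. The Python sketch below assumes round-to-nearest-even, a common choice. The function names are hypothetical.

```python
import struct

def float32_to_bf16_bits(value: float) -> int:
    """Round `value` (as IEEE 754 binary32) to bfloat16, returning the 16-bit pattern.

    Assumes round-to-nearest-even; NaNs are kept as quiet NaNs.
    """
    (word,) = struct.unpack("<I", struct.pack("<f", value))
    if (word & 0x7F800000) == 0x7F800000 and (word & 0x007FFFFF):
        return ((word >> 16) | 0x0040) & 0xFFFF
    # Add 0x7FFF, plus 1 if the kept LSB is odd, so ties round to even.
    rounding_bias = 0x7FFF + ((word >> 16) & 1)
    return ((word + rounding_bias) >> 16) & 0xFFFF

def bf16_bits_to_float32(bits: int) -> float:
    """Expand a 16-bit bfloat16 pattern back to a float by zero-filling the low 16 bits."""
    (value,) = struct.unpack("<f", struct.pack("<I", (bits & 0xFFFF) << 16))
    return value

if __name__ == "__main__":
    for x in (1.0, 3.14159265, -0.1):
        b = float32_to_bf16_bits(x)
        print(f"{x} -> 0x{b:04X} -> {bf16_bits_to_float32(b)}")
```

Converting back is exact because BF16 is a strict subset of float32. Only the rounding step on the way in loses information.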

Title: Implementación en Arduino de una red neuronal cuantizada tolerante a fallos (Arduino implementation of a fault-tolerant quantized neural network)

Authors: Joaquín Gracia-Morán, David de Andrés, Luis-J. Saiz-Adalid, Juan Carlos Ruiz, J.-Carlos Baraza-Calvo, Daniel Gil-Tomás, Pedro J. Gil-Vicente

Abstract: Neural networks are currently being used in domains as disparate as industrial, space and medical environments, where it is essential to balance performance, power consumption and silicon area. If these devices are also part of a critical system, high fault tolerance must be guaranteed as well.

Neural network parameters are generally defined as 32-bit floating-point numbers, which entails high memory consumption. Due to the miniaturization of CMOS technology, memory is more susceptible to multiple faults, which can negatively affect the neural network parameters stored in it.

To optimize memory usage and speed up processing, an effective strategy is to reduce parameter precision by encoding the parameters with fewer bits. However, several questions arise when implementing these optimized networks in embedded systems: are the occupied area and latency really reduced? Do the networks remain dependable in critical environments?

This study addresses these questions. To this end, the parameters of a neural network have been quantized to 8-bit integers, and the network has been implemented on an Arduino system that incorporates Error Correction Codes. Its dependability has been analysed through fault injection and compared with that of a network with 32-bit floating-point parameters. The results will help assess whether this optimization improves performance without compromising robustness in critical applications.
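The abstract does not specify the quantization scheme. The following is a minimal sketch that assumes symmetric per-tensor quantization, with one scale factor and a zero point fixed at 0. It shows how 32-bit floating-point weights can be mapped to 8-bit integers and back. The function names are hypothetical.

```python
from typing import List, Tuple

def quantize_int8_symmetric(weights: List[float]) -> Tuple[List[int], float]:
    """Map float weights to int8 codes in [-127, 127] using a single scale factor."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # round() is round-half-to-even in Python; clamp guards against float error.
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale

def dequantize_int8(codes: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 codes."""
    return [c * scale for c in codes]

if __name__ == "__main__":
    weights = [0.42, -1.3, 0.07, 0.9]
    codes, scale = quantize_int8_symmetric(weights)
    approx = dequantize_int8(codes, scale)
    for w, c, a in zip(weights, codes, approx):
        print(f"{w:+.4f} -> {c:4d} -> {a:+.4f}")
```

With this scheme, each recovered weight lies within half a scale step of the original. An embedded implementation might use a different rounding mode or per-channel scales.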

Title: Hacia la evaluación en software de la robustez de aceleradores hardware para CNN cuantizadas (Towards software-based evaluation of the robustness of hardware accelerators for quantized CNNs)

Authors: Juan Carlos Ruiz, David de Andrés, Juan Carlos Baraza, Luis José Saiz-Adalid, Joaquín Gracia-Morán

Abstract: Thanks to their low memory and energy consumption, as well as their higher execution speed, quantized convolutional neural networks are especially well suited to embedded systems that perform image analysis. These benefits increase when the networks are implemented on hardware accelerators generated from software models using high-level synthesis and electronic design automation tools. In critical systems, where functional safety guarantees are required, it is essential to evaluate the robustness of these accelerators against accidental and malicious faults that may alter their nominal behaviour during their life cycle. Performing this evaluation in the early stages of network development reduces costs, but the software models available at those stages rarely reflect the behaviour of the hardware accurately. This work proposes a fault injection methodology, designed for software models of quantized convolutional neural networks, that seeks to faithfully reproduce the effects that bit-flips can have on the network's inference process once it is implemented on a hardware accelerator. The methodology is validated with a quantized version of LeNet described in Python. This contribution lays the foundations for an early and representative evaluation of the robustness of quantized convolutional networks, with the aim of facilitating the design of safer and more dependable embedded artificial intelligence solutions in the future.

Attendance at the EDCC

April 27, 2025

The ITACA Institute has reported on the attendance of GSTF researchers at EDCC 2025. The full news item can be found here.

Poster award at EDCC 2025

April 15, 2025

The poster entitled “Towards a novel 8-bit floating point format to increase robustness in CNNs”, by Luis J. Saiz-Adalid, has received the Distinguished Poster award at EDCC 2025.

Congratulations!!

Paper award

April 15, 2025

The paper entitled “A Hybrid Technique based on ECC and Hardened Cells for Tolerating Random Multiple-Bit Upsets in SRAM arrays”, by Daniel Gil-Tomás, Luis J. Saiz-Adalid, Joaquín Gracia-Morán, J. Carlos Baraza-Calvo and Pedro J. Gil-Vicente, has received third prize in the ITACA Institute's 2024 awards for the publications with the highest impact factor.

Abstract:

Multiple-bit upsets (MBUs) are an increasing challenge in SRAM memories, due to the large chip area occupied by SRAM and the power-supply scaling applied to reduce static consumption. Powerful ECCs can cope with random MBUs, but at the expense of complex encoding/decoding circuits and high memory redundancy. Alternatively, radiation-hardened cells can mask single or even double node upsets in the same cell, but at the cost of increasing the overhead of the memory array. The idea of this work is to combine both techniques to take advantage of their respective strengths. To reduce redundancy and encoder/decoder overheads, a SEC Hamming ECC has been chosen. As for hardened cells, the well-known and robust DICE cells, able to tolerate one node upset, have been used. To assess the proposed technique, we have measured the correction capability after a fault injection campaign, as well as the overhead (redundancy, area, power, and delay) of the memory and the encoding/decoding circuits. Results show high MBU correction coverage with an affordable overhead. For instance, for very harmful 8-bit random MBUs injected in the same memory word, more than 80% of the cases are corrected. The area overhead of our proposal, measured with respect to double and triple error correction codes, is less than ×1.45. Achieving the same correction coverage with ECCs alone would require much higher redundancy and overhead.
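For readers unfamiliar with SEC Hamming codes, the Python sketch below illustrates only the ECC half of the idea. It uses an illustrative 8-bit dataword encoded as a 12-bit Hamming(12,8) codeword, whose syndrome directly gives the position of a single erroneous bit. The word width and function names are assumptions for illustration. The sketch does not model the DICE cells or the memory organisation used in the paper.

```python
# Hypothetical Hamming(12,8) SEC code; positions are 1-indexed, bit (pos - 1) holds position pos.
DATA_POSITIONS = [3, 5, 6, 7, 9, 10, 11, 12]
PARITY_POSITIONS = [1, 2, 4, 8]
CODE_LENGTH = 12

def hamming_encode(data: int) -> int:
    """Encode an 8-bit dataword into a 12-bit SEC Hamming codeword."""
    code = 0
    for i, pos in enumerate(DATA_POSITIONS):
        if (data >> i) & 1:
            code |= 1 << (pos - 1)
    # Parity bit p covers every position whose index has bit p set (even parity).
    for p in PARITY_POSITIONS:
        parity = 0
        for pos in range(1, CODE_LENGTH + 1):
            if pos & p and (code >> (pos - 1)) & 1:
                parity ^= 1
        code |= parity << (p - 1)
    return code

def hamming_syndrome(code: int) -> int:
    """XOR of the positions of all set bits: 0 for a valid codeword, else the flipped position."""
    syndrome = 0
    for pos in range(1, CODE_LENGTH + 1):
        if (code >> (pos - 1)) & 1:
            syndrome ^= pos
    return syndrome

def hamming_decode(code: int) -> int:
    """Correct at most one flipped bit, then extract the 8-bit dataword."""
    s = hamming_syndrome(code)
    if 1 <= s <= CODE_LENGTH:
        code ^= 1 << (s - 1)
    data = 0
    for i, pos in enumerate(DATA_POSITIONS):
        if (code >> (pos - 1)) & 1:
            data |= 1 << i
    return data

if __name__ == "__main__":
    word = hamming_encode(0xA5)
    corrupted = word ^ (1 << 6)  # single upset at position 7
    print(hex(hamming_decode(corrupted)))
```

A plain SEC code corrects only one error per word. Two flips in the same word produce a nonzero syndrome that typically points at the wrong bit, or outside the codeword. This is why random MBUs call for stronger codes or additional protection, such as the hardened cells mentioned in the abstract.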

Participation in the EDCC 2025

April 14, 2025

Several members of the group traveled to Lisbon to participate in EDCC 2025, where they presented the work the group is currently carrying out.